tl;dr It's a timer that injects itself into the game, which allows the script to run exactly on each frame, making timing more accurate. If you're interested in testing it, the source code is available on GitHub: https://github.com/3RD-Maple/SpeedTool and a download is available on itch: https://3rdmaple.itch.io/speedtool . Please note that the project is still a WIP; some things might be broken, buggy, or missing. I would appreciate any feedback or contribution!

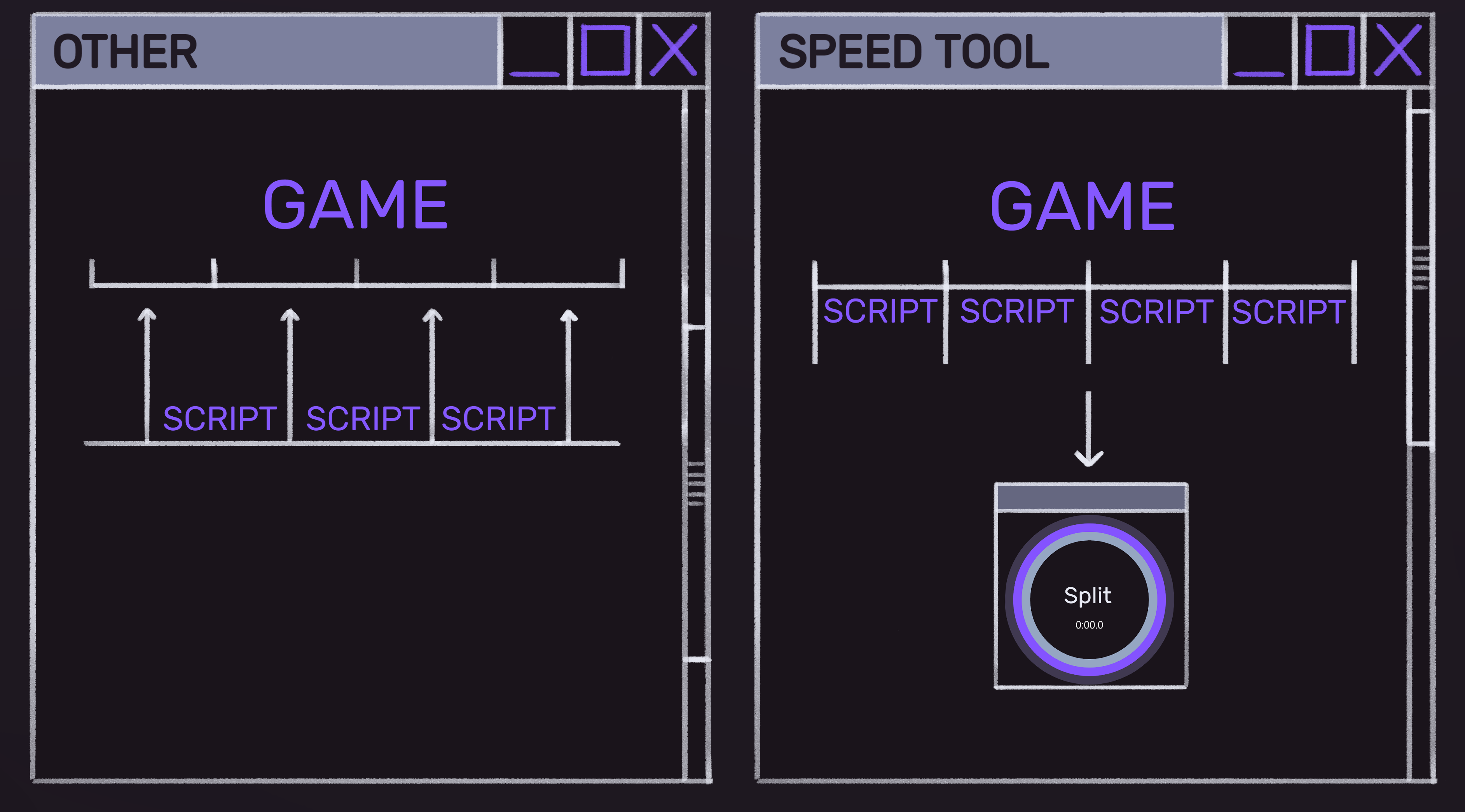

Now let me explain. I've had a few gripes with LiveSplit for quite a while, and I finally figured out a way to improve some things.

First, let's talk about how scripting works in LiveSplit. ASL is a language that's transpiled to C# code, which then runs on a background thread at (I believe) a 120 Hz refresh rate. This has a few implications. First of all, ASL allows any C# code to be executed, which is unsafe. If you don't pay attention to what you run as an ASL script, it can potentially harm your PC, either intentionally or unintentionally. Second of all, that background thread is not necessarily in perfect synchronization with the game it runs for. It can start executing the script in between frames, adding a few milliseconds of inaccuracy, or completely miss some loading screens if they are short enough and the game runs at a much higher refresh rate. There are also OS timing issues, which make the background-thread approach a little inconsistent.

In my timer, SpeedTool, the script is injected into the target game. It then hijacks the graphics API function that presents a game frame to the screen, and executes your script at that time. So it achieves perfect synchronization with the game's refresh rate no matter what. In a sense, it works similarly to how an FPS counter, or any other overlay, works :) Here's a fancy pic to help illustrate the difference.
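To make the "missed loading screen" case concrete, here is a tiny simulation. It is only a sketch with made-up numbers (a 240 fps game and a loading screen that lasts a single frame); it does not use any LiveSplit or SpeedTool code, and the function names are hypothetical.

```python
def frames_seen_by_hook(frame_states):
    """A per-frame hook runs once for every presented frame, so it sees them all."""
    return list(frame_states)


def frames_seen_by_poller(frame_states, game_fps, poll_hz):
    """A background poller only samples whichever frame is current at each tick."""
    total_ticks = len(frame_states) * poll_hz // game_fps
    seen = []
    for tick in range(total_ticks):
        # Index of the frame on screen at time tick / poll_hz (integer math).
        frame_index = tick * game_fps // poll_hz
        seen.append(frame_states[frame_index])
    return seen


# Assumed numbers for illustration only.
GAME_FPS = 240
POLL_HZ = 120

frames = ["game"] * 24
frames[5] = "loading"  # a one-frame loading screen that falls between two ticks

hook_view = frames_seen_by_hook(frames)
poll_view = frames_seen_by_poller(frames, GAME_FPS, POLL_HZ)

print("hook saw loading:  ", "loading" in hook_view)
print("poller saw loading:", "loading" in poll_view)
```

With these numbers the poller only ever looks at even-numbered frames, so the loading frame slips through, while the per-frame view catches it. A real background thread is messier than this because its ticks also drift with OS scheduling.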

As for the safety aspect, I used a Lua sandbox to run scripts in a safe environment that can only do harmless things. It should be impossible to do any harm with a script this way. Regarding the user interface, I tried doing something different from the usual wall-of-text approach of conventional speedrunning timers. It's the default display option, and I do hope you like it. However, a "Classic" interface option is available in case you want something more familiar.

I tried coming to this project with a fresher look at how things are, so SpeedTool might feel different. For one, I moved away from the "Splits" idea to a "Game" idea, which combines splits, categories, scripts, and other necessary stuff into a single Game file. I believe it should be easier to share pre-made solutions this way. A side effect of this is that I haven't implemented per-split personal bests YET, as it gets complicated trying to match splits from a Game file to a saved personal best, especially when something changes. On the plus side, I will create game vaults that will have full run history, with splits and everything.

Did I already mention that this timer runs natively on Linux too? It should work on macOS as well, but I have no way of testing it, as I don't own any Mac hardware. Unfortunately, the scripting part only works on Windows, as I have no idea how to approach DLL injection on other systems. If you do - please consider making a PR :) Scripting should work with OpenGL, DirectX 9, and DirectX 11 games. I will add DirectX 10 and 12 support in the near future (or you can make a PR if you beat me to it). Please note that injection might be impossible for some games, due to anti-cheat measures, game-specific implementation quirks, or because it's the wrong Moon phase.

As a specific bonus, the splits structure allows for infinite subsplit nesting - you can now make as many subsplit levels as you want. I know we all secretly wanted that ;)
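For the curious, arbitrary nesting is easy to model as a tree. The sketch below is only an illustration in Python; the `Split` class and `leaf_names` helper are hypothetical and are not SpeedTool's actual data model or file format.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Split:
    """A split that may contain any number of nested subsplits (hypothetical model)."""
    name: str
    subsplits: list[Split] = field(default_factory=list)


def leaf_names(split, prefix=""):
    """Recursively collect the leaf splits, each prefixed with its parents' names."""
    path = f"{prefix}/{split.name}" if prefix else split.name
    if not split.subsplits:
        return [path]
    names = []
    for child in split.subsplits:
        names.extend(leaf_names(child, path))
    return names


# Made-up example: three levels of nesting under one category.
run = Split("Any%", [
    Split("Act 1", [
        Split("Intro"),
        Split("Boss", [Split("Phase 1"), Split("Phase 2")]),
    ]),
    Split("Act 2"),
])

for name in leaf_names(run):
    print(name)
```

Because each split just holds a list of more splits, the depth is limited only by how deep you want to go.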

Some of the future plans include:

- Runs vault, to store full speedrunning history

- Automatic Game file loading, depending on what game is currently open

- Web source capture support for OBS

- More display options

- More customisation options

All of this said, I do hope you enjoy and find this idea to be useful. I'd appreciate any feedback, idea, or contribution. Let's make a great new timer!

George, please, relogin. As for your question, no, that would be modifying a game, which is not allowed in speedrunning. Thus we won't be able to allow such a run; even if we did, it would only be as a category extension, which does not exist :)

@Tigger77 I'm using a pretty standard solution: GoogLeNet via Caffe. The reasoning behind that is it was the only solution that worked out of the box and doesn't require a lot of hardware to use - in the worst-case scenario, it could easily be run on a CPU. I'm not that deep into the fancy theory behind all the architectures and stuff, so please forgive my ignorance :)

I'm feeding individual frames and using a float in [0; 1] as the result - loading screens usually stand out, and when it is a loading screen, the result is usually closer to 1 than anything else. For now most actual loading screens have >0.96 output and I take advantage of that.
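Roughly, turning those per-frame scores into a loadless time could look like the sketch below. It is only an illustration: the 0.96 cutoff comes from what I described above, while the function name and the sample scores are made up, and the real pipeline is not shown here.

```python
LOADING_THRESHOLD = 0.96  # most real loading screens score above this


def loadless_seconds(frame_scores, fps, threshold=LOADING_THRESHOLD):
    """Count the frames NOT classified as loading and convert them to seconds."""
    gameplay_frames = sum(1 for score in frame_scores if score <= threshold)
    return gameplay_frames / fps


# Made-up scores for a 10-frame clip at 30 fps: three frames look like loading.
scores = [0.02, 0.10, 0.05, 0.97, 0.99, 0.98, 0.40, 0.03, 0.01, 0.02]
print(loadless_seconds(scores, fps=30))
```

The 0.40 frame stays counted as gameplay, which is the point of keeping the cutoff high: only frames the network is very sure about get removed from the time.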

We [me and my coworkers] discussed the idea of feeding more than 1 frame, but it was quickly rejected as a GPU-heavy solution. After some talk we came to a conclusion that it would be best to see how a single frame would work and only then take action if necessary. And it seems like it isn't :)

About the classification, at first it was clear that the network struggled really hard when the loading screens differed. For example, if one loading screen was notably different from another, it would only start recognizing one of them. I solved the issue by creating more classes (e.g. game, loading1, loading2, etc.), and that seems to work really well - initially it gave me the biggest boost towards having a working thing. In conclusion, it really matters to introduce as many classes as the network needs.
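With several loading classes, the per-class outputs still have to be folded back into a single "loading or not" answer. One possible way, sketched here under my own assumptions (summing the loading classes and reusing a 0.96 cutoff; the class labels are just the example names from above):

```python
CLASS_NAMES = ["game", "loading1", "loading2"]  # example labels, not a fixed list


def is_loading(class_probs, threshold=0.96):
    """Treat a frame as loading if the loading classes together exceed the threshold."""
    loading_prob = sum(
        prob
        for name, prob in zip(CLASS_NAMES, class_probs)
        if name.startswith("loading")
    )
    return loading_prob > threshold


# Made-up network outputs, one probability per class.
print(is_loading([0.01, 0.98, 0.01]))  # clearly the first loading screen
print(is_loading([0.90, 0.05, 0.05]))  # clearly gameplay
print(is_loading([0.02, 0.50, 0.48]))  # unsure WHICH loading screen, but sure it is one
```

The last case is why summing can be handy: the network may hesitate between two loading screens while still being confident that the frame is not gameplay.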

@Soulkilla thank you for the reply, but I would rather not share much at this point -- I don't enjoy sharing barely working things, it just sucks IMO. I don't want interference either, because merging could be a huge pain. I will eventually make a git repo when I'm sure the project is usable.

@ShikenNuggets that is... actually a pretty dope idea! Just making some kind of timeline that the AI would "mark" with what it thinks a loading screen is so you can later on jump between those places and recheck everything manually. Taken into consideration, but it would take much (I mean MUCH) more time to implement. GUI is always a pain to work with.

Also an update: I hit within-a-second matches on NG+ runs, and any% runs are only 5 seconds off, making the current one 99.93% accurate, which is already close to ideal.

Oh, that thing, yeah. While it is possible to check for splices and cheating using AI, it sounds more like a Nobel Prize Winning Project than a srcom thread ^^

Thank you guys for your kind responses.

@oddtom Accuracy is indeed the #1 priority over anything else, but, unfortunately, we cannot really speak of 100% accuracy because of some technical stuff that goes on behind the scenes - the result of neural network processing is not a "true/false" answer, but rather a probability of a certain case, like "98% sure it's a loading screen". Using statistics or brute force we can reduce the effect of such errors and get results that look 100% accurate, but technically speaking 100% accuracy is unreachable.
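As one example of the "statistics" part: a single misclassified frame in the middle of gameplay (or in the middle of a loading screen) can be voted out by its neighbours. This is just a sketch of that general idea, a sliding-window majority vote, and not necessarily what the project does; the function name and sample data are made up.

```python
def smooth_decisions(decisions, window=5):
    """Replace each per-frame decision with the majority vote of its neighbourhood."""
    half = window // 2
    smoothed = []
    for i in range(len(decisions)):
        lo = max(0, i - half)
        hi = min(len(decisions), i + half + 1)
        neighbourhood = decisions[lo:hi]
        # True only if strictly more than half of the neighbours say "loading".
        smoothed.append(sum(neighbourhood) * 2 > len(neighbourhood))
    return smoothed


F, T = False, True
# Made-up per-frame decisions: one false positive at index 3,
# one false negative at index 10 inside a real loading screen.
raw = [F, F, F, T, F, F, F, T, T, T, F, T, T, T]
print(smooth_decisions(raw))
```

The trade-off is that a loading screen shorter than about half the window would get voted out too, so the window has to stay small compared to the shortest real loading screen.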

About the PC reqs... they are not that strict: you need a moderate CPU + NVIDIA GPU combination for it to work (CUDA is needed for the calculations). I'm using an i5-3470 and a GTX 1050 Ti and that is more than enough. The GPU is the most valuable thing here, obviously. I would like to test it on some other PCs as well, like using CPU only, etc. Oh, you also need Linux so far, but I plan on making a Windows variant as well for obvious reasons :) This PC allows scanning videos at about 3x the original speed, or around 90 fps on average.

@ShikenNuggets about how much data is needed. tl;dr: more data is not always good. More detailed explanation below.

Currently, I use 16k training and 6k validation images, totaling 22k images - that's a little more than 12 minutes of 30 fps video. Bigger is better, but overfitting is a huge problem - the AI can just "remember" all the input images and not really "learn" to tell them apart; I already had that happen on my test set of 22k images. More brain power is required to actually train it in the best way, which I'm trying to do at the moment.

And, to answer @MelonSlice , humans make mistakes as well. I don't consider human-retimed times to be 100% accurate, as we at NFS have already experienced issues where different people would get different results for the same runs. It's kinda OK to make mistakes. With a neural network we can tell the time with a certain margin of error - for example, we can say that "This run is 12:46 ± 1 second loadless". So, if that 1 second makes a difference, e.g. it would move a particular run up or down the leaderboard, it would require further investigation. Otherwise I don't see many problems with leaving it "as is" with a comment that "a 1 second margin of error can be present".
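That "does the margin matter?" check is simple enough to automate. A minimal sketch, with a hypothetical function name and made-up leaderboard times (all in seconds):

```python
def needs_manual_review(run_seconds, margin_seconds, other_runs_seconds):
    """Return True if any other run lies within the margin of error of this run,
    i.e. the leaderboard order could change depending on the true time."""
    lo = run_seconds - margin_seconds
    hi = run_seconds + margin_seconds
    return any(lo <= other <= hi for other in other_runs_seconds)


run = 12 * 60 + 46  # 12:46 expressed in seconds

# Made-up leaderboards.
print(needs_manual_review(run, 1, [750, 765.5, 790]))  # a run sits inside the margin
print(needs_manual_review(run, 1, [750, 790]))         # nothing close enough to matter
```

Only the runs flagged this way would need a human to recheck them; everything else could keep the automatic time with the margin noted in a comment.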

Hello there everyone.

Recently I started a project to train a neural network that takes a speedrun video as input and outputs the time without loads. And it seems that I'm having stunning success with it so far!

I've used Need for Speed: Carbon as a baseline since I happen to mod it and because it uses time without loads. Even though the network is not working completely as I intended so far, it manages to hit 1 second accuracy on any% NG+ runs, making it already useful for retiming runs in that particular category.

If there are any active mods reading this, I could use some of your feedback, please. In particular, I would like to know what you would expect from such a thing - PC requirements, accuracy, analysis speed, etc. Do you think it would be acceptable to use AI for such a task, given that it has good accuracy?

Also feel free to ask questions.